In real-world deployments of machine learning, big data analytics and data science, building and tuning models constitutes only about 25% of the total work. Around 50% goes into making sure the data is ready for machine learning and analytics, and the remaining 25% goes into making the insights and conclusions drawn from models easy to absorb at scale. The data pipeline is the runway from which the aeroplanes of machine learning take off. If the runway is not built correctly, the airport cannot succeed in the long term.

As you get to know data pipelines and alternative big data architectures, you will come to understand four key principles:

- Perspective

- Pipeline

- Possibilities

- Production

These principles are explained in the sections below.

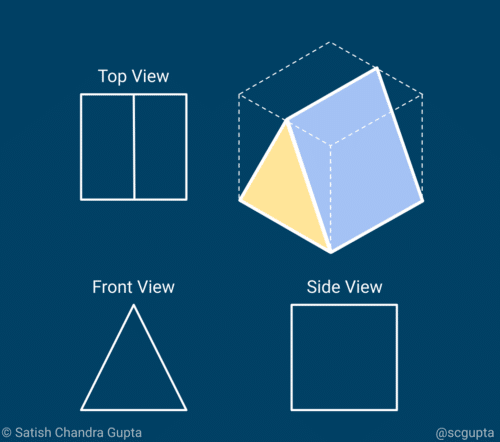

Perspective:

Data scientists, engineers and business managers are all stakeholders in the creation of an effective data analytics or machine learning application build.

Each of these stakeholders has their own perspective on the build as a whole. The data scientist aims to find the most powerful and least expensive model for the given data.

The engineer, on the other hand, is looking to build something that others can rely on, whether that means building something completely new or something better than what already exists. The business manager wants to deliver something of higher value to customers, with science and engineering being the means to that end.

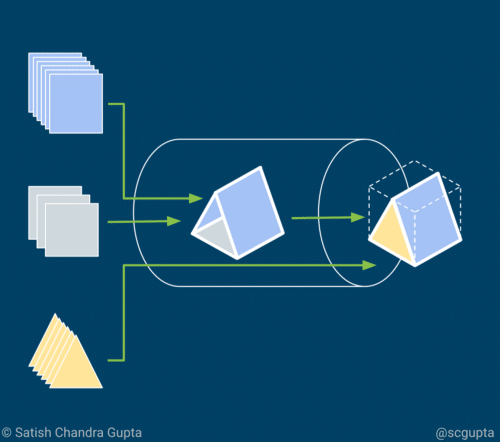

Pipeline:

When looking at data in its simplest form, it is hard to foresee the actions that can be taken. The true value of said data is seen and felt when it is turned into actionable insights that are promptly delivered. Time is truly of the essence here.

So what exactly is a data pipeline?

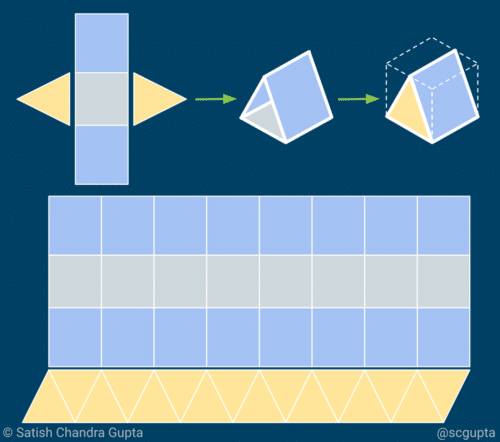

The easiest way to explain it is to think in terms of knitting a sweater. The data pipeline is the knitting needles, and the collected data is the wool. The needles turn the collected data into insights, which are used to train a model. The model in turn delivers further insights, and these are ultimately fed back into the model to ensure that business goals are met wherever and whenever action needs to take place.

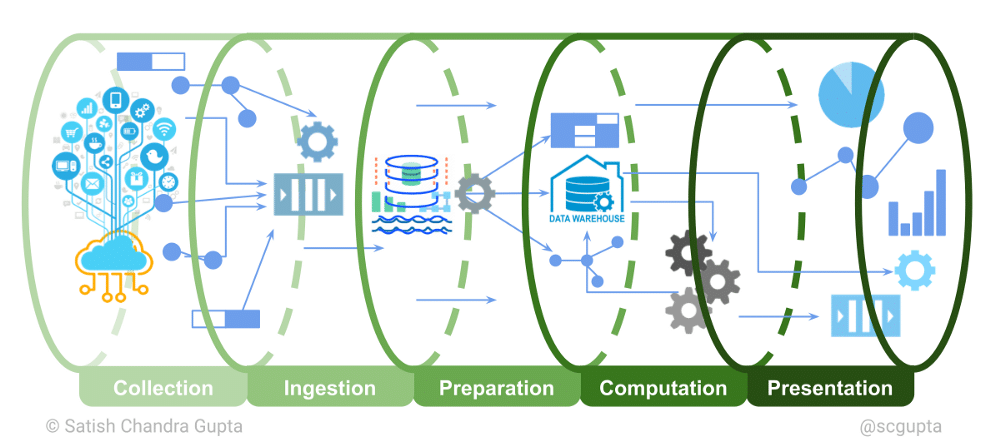

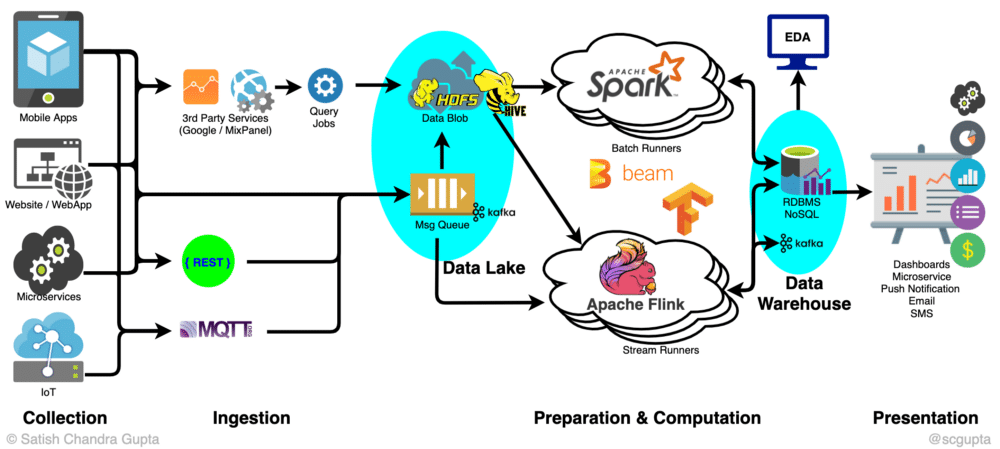

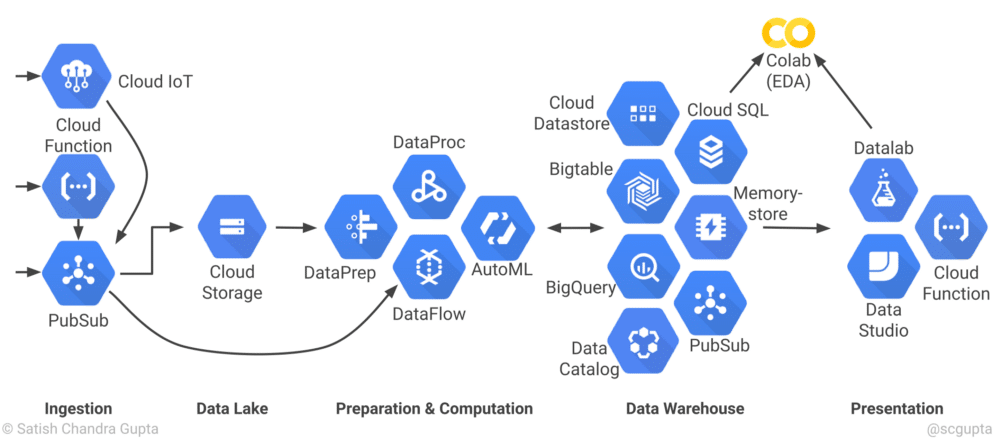

A data pipeline has five stages, grouped under three heads:

- Data Engineering: collection, ingestion, preparation (~50% effort)

- Analytics / Machine Learning: computation (~25% effort)

- Delivery: presentation (~25% effort)
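As a rough illustration of how the five stages chain together, here is a minimal in-memory sketch. The sample records, field names and function names are all hypothetical and chosen only for this example; a real pipeline would read from actual sources and typically run each stage on separate infrastructure.

```python
# Hypothetical raw events; in practice these would come from logs, apps or sensors.
RAW_EVENTS = [
    "2024-01-01,alice,purchase,30.0",
    "2024-01-01,bob,purchase,",        # amount is missing
    "2024-01-02,alice,purchase,20.0",
    "not-a-valid-record",              # malformed line
]


def collect():
    """Collection: gather raw records from the source."""
    return list(RAW_EVENTS)


def ingest(lines):
    """Ingestion: parse raw lines into structured records, skipping malformed ones."""
    records = []
    for line in lines:
        parts = line.split(",")
        if len(parts) != 4:
            continue
        date, user, event, amount = parts
        records.append({"date": date, "user": user, "event": event, "amount": amount})
    return records


def prepare(records):
    """Preparation: drop incomplete records and convert amounts to numbers."""
    cleaned = []
    for record in records:
        if record["amount"] == "":
            continue
        cleaned.append({**record, "amount": float(record["amount"])})
    return cleaned


def compute(records):
    """Computation: a stand-in for analytics or ML, here total spend per user."""
    totals = {}
    for record in records:
        totals[record["user"]] = totals.get(record["user"], 0.0) + record["amount"]
    return totals


def present(totals):
    """Presentation: turn results into something a person can read."""
    return [f"{user}: {total:.2f}" for user, total in sorted(totals.items())]


def run_pipeline():
    """Run all five stages in order."""
    return present(compute(prepare(ingest(collect()))))


if __name__ == "__main__":
    for line in run_pipeline():
        print(line)
```

Even in this toy version, three of the five functions deal with getting the data into shape before any computation happens, which mirrors the effort split above.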

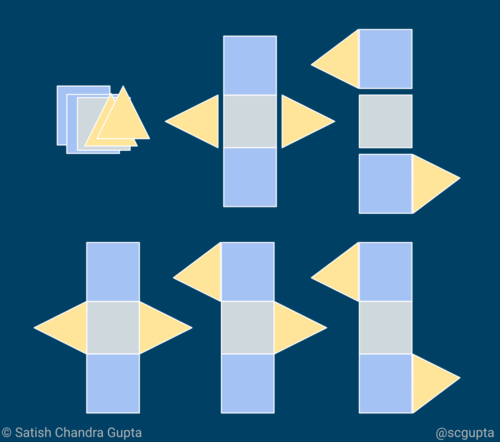

Possibilities:

Ultimately, architecture is a trade-off between performance and cost. Sometimes the technically best option is not the best solution overall.

You need to consider the following:

- Do you need real-time insights or model updates?

- What is the staleness tolerance of your application?

- What are the cost constraints?

Taking the above into consideration, you also need to weigh up the batch and streaming processes of the Lambda Architecture.
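To make the batch and streaming split concrete, the toy sketch below counts events the way a Lambda Architecture would: an append-only master dataset is periodically recomputed into a batch view, while a speed view covers the events that have arrived since the last batch run, and queries merge the two. The class and method names are hypothetical, and the example is single-threaded and in-memory; a real system would use separate batch and stream processing engines.

```python
from collections import defaultdict


class LambdaCounter:
    """Toy Lambda Architecture: batch view plus speed view, merged at query time."""

    def __init__(self):
        self.master_log = []                # append-only master dataset
        self.batch_view = {}                # accurate, but only as fresh as the last batch run
        self.speed_view = defaultdict(int)  # covers events since the last batch run

    def ingest(self, key):
        """Every event goes to both the master dataset and the speed layer."""
        self.master_log.append(key)
        self.speed_view[key] += 1

    def run_batch(self):
        """Batch layer: recompute the view from the full master dataset."""
        view = defaultdict(int)
        for key in self.master_log:
            view[key] += 1
        self.batch_view = dict(view)
        # The batch view now covers everything, so the speed view can be reset.
        self.speed_view.clear()

    def query(self, key):
        """Serving layer: merge the batch and speed views."""
        return self.batch_view.get(key, 0) + self.speed_view.get(key, 0)


if __name__ == "__main__":
    counter = LambdaCounter()
    counter.ingest("page_a")
    counter.ingest("page_a")
    counter.run_batch()
    counter.ingest("page_a")        # arrives after the batch run
    print(counter.query("page_a"))  # batch count plus speed count
```

How often `run_batch` is scheduled is where staleness tolerance and cost meet: running it rarely is cheaper, but leaves more of each answer dependent on the speed layer.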

The images below showcase an architecture that uses open source technology for all stages of the big data pipeline.

Key components of the big data architecture and technology choices can be seen here.

There is also the advent of serverless computing, such as that offered by Google.

Production:

Production requires careful attention. If not monitored closely, the health of the pipeline may deteriorate without anyone noticing and become defunct.
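One simple way to catch silent deterioration is a periodic health check that looks at how recently each stage last succeeded and how much data it processed. The sketch below assumes each stage reports a last-success timestamp and a row count; the `StageStatus` fields, the thresholds and the alert wording are hypothetical, and a real deployment would usually rely on a dedicated monitoring or alerting service.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class StageStatus:
    name: str
    last_success: datetime  # when the stage last finished successfully
    rows_processed: int     # rows handled in that run


def check_health(stages, now, max_staleness, min_rows=1):
    """Return a list of human-readable alerts; an empty list means healthy."""
    alerts = []
    for stage in stages:
        age = now - stage.last_success
        if age > max_staleness:
            alerts.append(f"{stage.name}: no successful run for {age}")
        if stage.rows_processed < min_rows:
            alerts.append(f"{stage.name}: only {stage.rows_processed} rows processed")
    return alerts


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    stages = [
        StageStatus("ingestion", now - timedelta(minutes=10), 1200),
        StageStatus("preparation", now - timedelta(hours=7), 0),
    ]
    for alert in check_health(stages, now, max_staleness=timedelta(hours=1)):
        print(alert)
```

The `max_staleness` threshold would normally be derived from the staleness tolerance of the application the pipeline serves.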

Operationalising a data pipeline can be tricky. Here are some tips:

- Scale Data Engineering before scaling the Data Science team.

- Be diligent about keeping your data warehouse clean.

- Start simple. Start with serverless, using as few pieces as you can manage.

- Build only after careful evaluation. What are the business goals? What levers do you have to affect the business outcome?

Taking all of the above into account, here are a few key takeaways:

- Tuning analytics and machine learning models is only about 25% of the effort.

- Invest in the data pipeline early, because analytics and ML are only as good as the data.

- Ensure easily accessible data for exploratory work.

- Start from business goals, and seek actionable insights.

Here at Radical Cloud Solutions we understand the value and importance of data: not just the volume or quality of the data, but the means by which you process it. Using the correct means to process data affects not only the results but ultimately also what you spend on data processing. We are certified partners of the Google Cloud Platform, and can assist you in moving your business forward.

For more information please contact us and let our team of experts assist you.